Exploring Amazon S3 Bucket Lifecycle Management Challenges: Incrementally Updating Rules with AWS Lambda

Introduction:

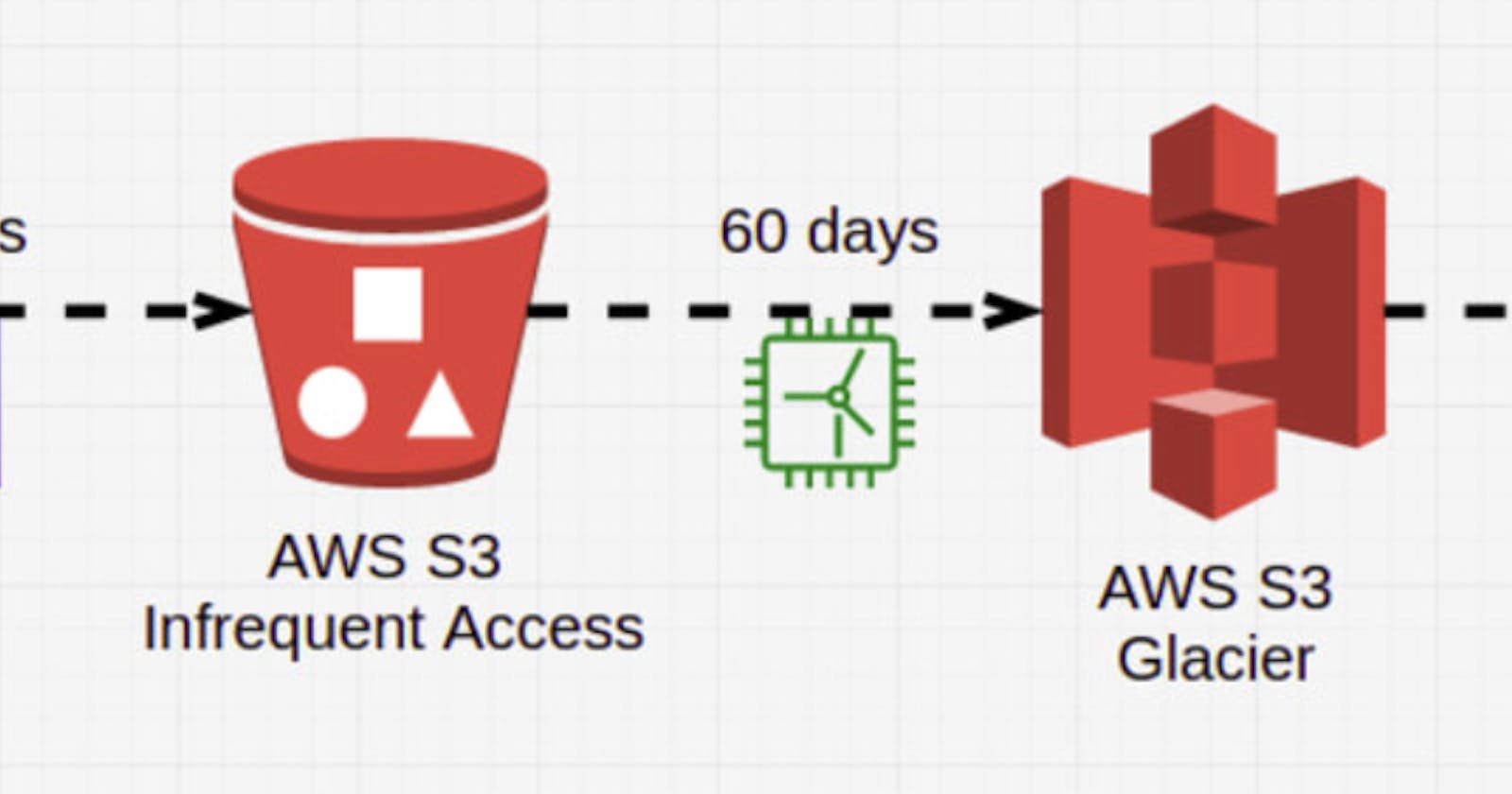

When working with Amazon S3, managing the lifecycle of objects is essential for optimizing storage costs and ensuring the timely removal of obsolete data. S3 lets you define lifecycle rules that automatically transition objects between storage classes or expire (delete) them after a specified period. However, each bucket has exactly one lifecycle configuration, which can hold up to 1,000 rules, and the PutBucketLifecycleConfiguration API replaces that entire configuration on every call. If you write a configuration that contains only your new rule, every existing rule is deleted. This makes it difficult to manage rules independently or to change one rule without affecting the others.
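In Boto3, the lifecycle configuration is passed as a single dictionary whose `Rules` list is the complete set of rules for the bucket. The sketch below uses hypothetical rule IDs, prefixes, and retention periods to show why writing only a new rule is destructive, and what a non-destructive payload has to contain instead.

```python
# Hypothetical existing configuration, shaped like the response of
# get_bucket_lifecycle_configuration (only the 'Rules' key is shown).
existing_config = {
    'Rules': [
        {
            'ID': 'archive-logs',
            'Filter': {'Prefix': 'logs/'},
            'Status': 'Enabled',
            'Transitions': [{'Days': 30, 'StorageClass': 'GLACIER'}],
        },
    ]
}

# A new rule we want to add without touching 'archive-logs'.
new_rule = {
    'ID': 'expire-tmp',
    'Filter': {'Prefix': 'tmp/'},
    'Status': 'Enabled',
    'Expiration': {'Days': 7},
}

# Naive payload: passed to put_bucket_lifecycle_configuration, this would
# become the whole configuration and silently drop 'archive-logs'.
naive_payload = {'Rules': [new_rule]}

# Merged payload: existing rules plus the new one, written back in one call.
merged_payload = {'Rules': existing_config['Rules'] + [new_rule]}

print([r['ID'] for r in naive_payload['Rules']])   # ['expire-tmp']
print([r['ID'] for r in merged_payload['Rules']])  # ['archive-logs', 'expire-tmp']
```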

In this blog post, we'll explore a solution to this problem: incrementally updating Amazon S3 bucket lifecycle rules with AWS Lambda. This approach lets you manage individual rules dynamically and separately, working around the single-configuration limit of S3.

Problem Statement:

The key challenge is how to incrementally update S3 bucket lifecycle rules without affecting existing rules, given that Amazon S3 supports only one lifecycle configuration per bucket.

Proposed Solution:

We will create an AWS Lambda function that manages S3 bucket lifecycle rules individually. The function is triggered by an event from your application or pipeline and lets you add, update, or delete a rule without affecting the others. It uses the AWS SDK for Python (Boto3) to read the bucket's current lifecycle configuration, modify the rule list in memory, and write the complete list back, so that rules are updated incrementally.
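The handler in this post implements only the "add" case. As a minimal sketch, the add/update/delete step could be isolated in a pure function that works on the rule list by `ID`; the name `apply_rule_change` and its `action` values are hypothetical, not part of Boto3. Keeping it free of AWS calls makes it easy to unit-test before wiring it into a handler.

```python
from typing import Any

Rule = dict[str, Any]

MAX_RULES = 1000  # S3 allows at most 1,000 rules per lifecycle configuration


def apply_rule_change(rules: list[Rule], action: str, rule: Rule) -> list[Rule]:
    """Return a new rule list with one rule added, updated, or deleted by ID.

    The input list is never modified, so the caller can compare the
    before/after lists and skip the S3 write when nothing changed.
    """
    others = [r for r in rules if r.get('ID') != rule['ID']]
    exists = len(others) != len(rules)

    if action == 'add':
        if exists:
            raise ValueError(f"Rule '{rule['ID']}' already exists")
        result = rules + [rule]
    elif action == 'update':
        if not exists:
            raise KeyError(rule['ID'])
        # Replace the matching rule and keep its position in the list.
        result = [rule if r.get('ID') == rule['ID'] else r for r in rules]
    elif action == 'delete':
        if not exists:
            raise KeyError(rule['ID'])
        result = others
    else:
        raise ValueError(f'Unknown action: {action}')

    if len(result) > MAX_RULES:
        raise ValueError(f'Lifecycle configuration would exceed {MAX_RULES} rules')
    return result
```

For a delete, only the `ID` key of `rule` is needed. If a delete leaves no rules at all, removing the configuration with `delete_bucket_lifecycle` is cleaner than writing back an empty list.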

In the following sections, we'll walk through the steps to create an AWS Lambda function, write the function code to manage S3 bucket lifecycle rules, and trigger the Lambda function from your application or pipeline.

Create an AWS Lambda function
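One way to create the function is with Boto3 itself. The sketch below assumes an execution role already exists, trusts `lambda.amazonaws.com`, and has basic CloudWatch Logs permissions; the function name, policy name, bucket ARN, handler file name, and `python3.12` runtime are all hypothetical choices. The clients are passed in as parameters, so the helpers can be exercised without AWS credentials.

```python
import io
import json
import zipfile

# Hypothetical names used only for illustration.
FUNCTION_NAME = 's3-lifecycle-rule-manager'
HANDLER_FILE = 'lambda_function.py'

# Permissions the handler needs: read and replace the bucket's lifecycle configuration.
LIFECYCLE_POLICY = {
    'Version': '2012-10-17',
    'Statement': [{
        'Effect': 'Allow',
        'Action': ['s3:GetLifecycleConfiguration', 's3:PutLifecycleConfiguration'],
        'Resource': 'arn:aws:s3:::my-example-bucket',  # hypothetical bucket
    }],
}


def attach_lifecycle_policy(iam_client, role_name: str) -> None:
    """Attach LIFECYCLE_POLICY inline to an existing role (iam_client: boto3 'iam')."""
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName='s3-lifecycle-rule-manager',  # hypothetical policy name
        PolicyDocument=json.dumps(LIFECYCLE_POLICY),
    )


def build_deployment_package(handler_source: str) -> bytes:
    """Zip the handler source in memory for a direct ZipFile upload."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, 'w', zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(HANDLER_FILE, handler_source)
    return buffer.getvalue()


def create_lifecycle_function(lambda_client, role_arn: str, handler_source: str) -> dict:
    """Create the function (lambda_client: boto3 'lambda')."""
    return lambda_client.create_function(
        FunctionName=FUNCTION_NAME,
        Runtime='python3.12',  # assumed runtime
        Role=role_arn,
        Handler='lambda_function.lambda_handler',  # <file name>.<function name>
        Code={'ZipFile': build_deployment_package(handler_source)},
        Timeout=30,
    )
```

In practice you would call these with `boto3.client('iam')` and `boto3.client('lambda')`, passing the source of the handler shown in the next section. Scoping `Resource` to specific bucket ARNs keeps the function from editing lifecycle rules on unrelated buckets.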

Write the Lambda function code to manage S3 bucket lifecycle rules

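```python
import boto3
from botocore.exceptions import ClientError

# Create the client once per execution environment so warm invocations reuse it.
s3 = boto3.client('s3')


def lambda_handler(event, context):
    bucket_name = event['bucket_name']
    new_rule = event['new_rule']

    # Get the current lifecycle configuration. A bucket that has none raises
    # NoSuchLifecycleConfiguration instead of returning an empty rule list.
    try:
        response = s3.get_bucket_lifecycle_configuration(Bucket=bucket_name)
        rules = response['Rules']
    except ClientError as err:
        if err.response['Error']['Code'] != 'NoSuchLifecycleConfiguration':
            raise
        rules = []

    # Check if a rule with the given ID already exists
    rule_ids = [rule.get('ID') for rule in rules]

    if new_rule['ID'] not in rule_ids:
        # Add the new rule, then write back the complete list:
        # put_bucket_lifecycle_configuration replaces the whole configuration.
        rules.append(new_rule)
        s3.put_bucket_lifecycle_configuration(
            Bucket=bucket_name,
            LifecycleConfiguration={'Rules': rules}
        )
        message = 'S3 Lifecycle configuration updated successfully.'
    else:
        message = f"Rule with ID '{new_rule['ID']}' already exists."

    return {
        'statusCode': 200,
        'message': message
    }
```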

This Lambda function first retrieves the current lifecycle configuration and then checks if a rule with the same ID as the new rule already exists in the configuration. If the rule ID is not found, it appends the new rule to the existing rules and updates the lifecycle configuration. If the rule ID is found, it returns a message indicating that the rule with the given ID already exists, and no changes are made to the configuration.
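To trigger the function from an application or pipeline step, one option is a synchronous invocation through Boto3. This sketch assumes the handler above, which reads `event['bucket_name']` and `event['new_rule']`; the helper name `invoke_rule_update` is hypothetical, and the Lambda client is passed in so the helper can be tested with a stub.

```python
import json
from typing import Any


def invoke_rule_update(lambda_client, function_name: str,
                       bucket_name: str, new_rule: dict[str, Any]) -> dict[str, Any]:
    """Invoke the lifecycle function, wait for it, and return its decoded result.

    lambda_client is expected to be a boto3 'lambda' client.
    """
    # Same keys the handler reads from the event.
    payload = {'bucket_name': bucket_name, 'new_rule': new_rule}
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType='RequestResponse',  # wait for the handler's return value
        Payload=json.dumps(payload).encode('utf-8'),
    )
    result = json.loads(response['Payload'].read())
    # An unhandled exception in the handler is signalled by the FunctionError key.
    if 'FunctionError' in response:
        raise RuntimeError(f'Lambda function error: {result}')
    return result
```

A pipeline step might call it as `invoke_rule_update(boto3.client('lambda'), 's3-lifecycle-rule-manager', 'my-example-bucket', {'ID': 'expire-tmp', 'Filter': {'Prefix': 'tmp/'}, 'Status': 'Enabled', 'Expiration': {'Days': 7}})`, where the function name, bucket, and rule are placeholders. Waiting for the result lets the pipeline fail fast when the rule is rejected.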

By implementing this solution, you can work around S3's single lifecycle configuration per bucket and manage individual rules dynamically and independently.
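Because the handler reads the configuration, changes it in memory, and writes the whole rule list back, two invocations running at the same time against the same bucket can overwrite each other's changes. One simple way to serialize updates, assuming a single function handles every lifecycle change, is to reserve a concurrency of one for it. With that limit, concurrent synchronous invocations are throttled rather than queued, so callers should retry on throttling errors, while asynchronous invocations are retried by Lambda. The helper name below is hypothetical.

```python
def serialize_lifecycle_updates(lambda_client, function_name: str) -> dict:
    """Cap the function at one concurrent execution (lambda_client: boto3 'lambda').

    With a single execution at a time, each read-modify-write of a bucket's
    lifecycle configuration finishes before the next one starts.
    """
    return lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=1,
    )
```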